Serverless video processing pipeline using AWS and HLS

Introduction

Streaming video over the web has always been an interesting problem. Cisco predicted that video would account for 80% of all internet traffic by 2021. Nearly 1 billion hours of video are streamed on YouTube every day, and platforms like Netflix and Amazon Prime Video rely heavily on video streaming to deliver their content. While traditional streaming methods served a single video file to an HTML5 video player, today's techniques are more complex and more efficient. Adaptive bitrate streaming is the most commonly used technique for streaming video over the web today.

Adaptive Bitrate Streaming (ABR)

ABR is a technique for streaming video over HTTP in which the source content is encoded at multiple bitrates, and the client switches between them, automatically or manually, based on the available network bandwidth. Streaming giants like YouTube and Netflix use ABR to adapt to each client's network conditions and pick a suitable video quality. When a video is uploaded to YouTube, multiple copies of it are made, each at a different bitrate. Bitrate is the number of bits used to encode one second of video. The higher the bitrate, the more detail the video can retain, and the more bandwidth it needs. Several streaming protocols implement adaptive bitrate streaming. Some of them are:

- HTTP Live Streaming (HLS)

- Dynamic Adaptive Streaming over HTTP (DASH)

- Microsoft Smooth Streaming (MSS)

- Adobe HTTP Dynamic Streaming (HDS)

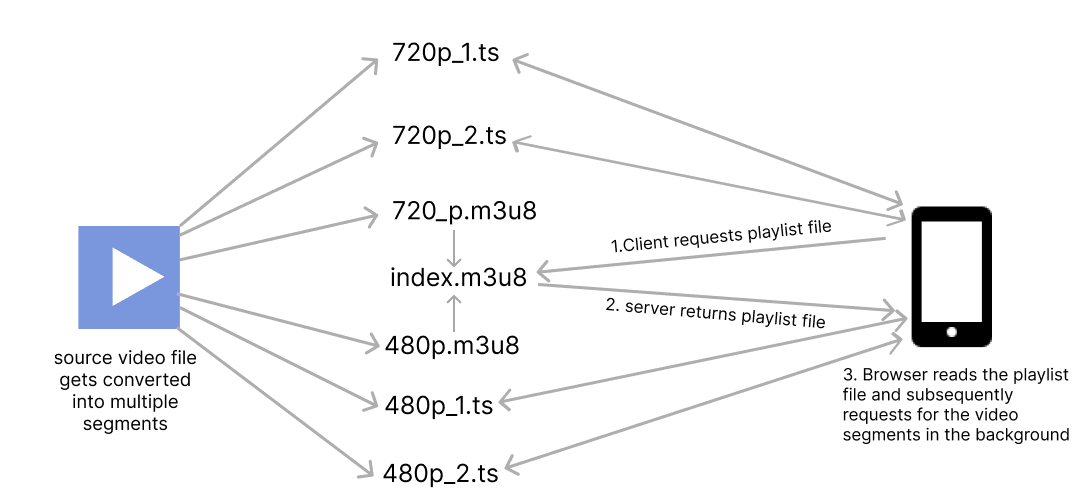

Most ABR protocols work by encoding the video at several bitrates, dividing each encoding into short segments, and using a manifest file to tell the client where to find each segment. Splitting every bitrate into segments, instead of serving one large file per bitrate, makes the video much easier to handle on the client side: the player can switch quality at a segment boundary and only fetches the parts of the video it needs. In this tutorial, we will use the HTTP Live Streaming (HLS) protocol to make videos suitable for ABR streaming. Before jumping to the tutorial, let's get to know more about HLS. If you want to directly jump to the tutorial, click here.
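To make the switching logic concrete, here is a minimal sketch of how a player might choose a quality level from a measured bandwidth. The bitrate ladder reuses the bandwidth values that appear later in this post; the function name pickRendition and the safetyFactor parameter are hypothetical, and real players use more elaborate heuristics (buffer level, download history and so on).

```javascript
// Bitrate ladder: one entry per encoded copy of the video.
const renditions = [
  { name: '360p', bandwidth: 800000 },
  { name: '480p', bandwidth: 1400000 },
  { name: '720p', bandwidth: 2800000 },
  { name: '1080p', bandwidth: 5000000 },
];

// Pick the highest rendition whose bandwidth fits within a safety margin of the
// measured throughput; fall back to the lowest rendition when nothing fits.
// safetyFactor is a hypothetical tuning knob, not part of any protocol.
function pickRendition(ladder, measuredBps, safetyFactor = 0.8) {
  const sorted = [...ladder].sort((a, b) => a.bandwidth - b.bandwidth);
  const budget = measuredBps * safetyFactor;
  let choice = sorted[0];
  for (const rendition of sorted) {
    if (rendition.bandwidth <= budget) choice = rendition;
  }
  return choice;
}

console.log(pickRendition(renditions, 4000000).name); // 720p
console.log(pickRendition(renditions, 500000).name); // 360p
```

A player would call something like this after every downloaded segment and request the next segment from the chosen rendition.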

HTTP Live Streaming (HLS)

HTTP Live Streaming (HLS) is an HTTP-based media streaming protocol developed by Apple. It was initially developed for video streaming on the iPhone and can now be played in most browsers, either natively or through a JavaScript library. HLS works by converting the source video into a number of segment files with the '.ts' extension and using an m3u8 file as the manifest. This manifest is also called a playlist file. HLS also supports live streaming and ad injection.

Playlist files and segments

An m3u8 file is an extended version of the m3u format, a plain-text file format originally created to organize mp3 playlists. It is saved with the m3u8 extension and is composed of a set of tags. An HLS segment contains a part of the source video and has the ts extension. The playlist file contains metadata and the URLs of the segments. The recommended duration for a segment is between 4 and 5 seconds.

720p.m3u8

    #EXTM3U
    # Every HLS playlist must start with the #EXTM3U tag above.
    # Lines that begin with "#" but not "#EXT" are comments.
    #EXT-X-VERSION:3
    # Maximum duration of a segment, in seconds
    #EXT-X-TARGETDURATION:5
    # Sequence number of the first segment URL, usually 0
    #EXT-X-MEDIA-SEQUENCE:0
    # VOD stands for video on demand; used to differentiate from a live stream
    #EXT-X-PLAYLIST-TYPE:VOD
    # Each #EXTINF tag gives the duration of the segment on the next line.
    # Segment URLs here are relative to the folder where the playlist resides, since we put
    # every file in a single folder. They can also be absolute, like
    # https://yourcdn.com/videofolder/720p_0.ts
    #EXTINF:4.804800,
    720p_0.ts
    #EXTINF:3.203200,
    720p_1.ts
    #EXTINF:4.804800,
    720p_2.ts
    #EXTINF:3.203200,
    720p_3.ts
    #EXTINF:4.804800,
    720p_4.ts
    #EXTINF:3.203200,
    720p_5.ts
    #EXTINF:4.337667,
    720p_6.ts
    # No more media files will be added to this playlist
    #EXT-X-ENDLIST
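To show how a client reads such a playlist, here is a minimal sketch of a parser that pairs each #EXTINF duration with the segment URL that follows it. The function name parseMediaPlaylist is hypothetical, and the sketch only handles the tags shown above; libraries such as hls.js implement the full format.

```javascript
// Parse a media playlist into its segments, their total duration,
// and whether the playlist is complete (#EXT-X-ENDLIST present).
function parseMediaPlaylist(text) {
  const lines = text.split(/\r?\n/).map(line => line.trim()).filter(Boolean);
  if (lines[0] !== '#EXTM3U') throw new Error('not an HLS playlist');

  const segments = [];
  let pendingDuration = null;
  let ended = false;

  for (const line of lines.slice(1)) {
    if (line.startsWith('#EXTINF:')) {
      // Format: "#EXTINF:<duration>,<optional title>"
      pendingDuration = parseFloat(line.slice('#EXTINF:'.length));
    } else if (line === '#EXT-X-ENDLIST') {
      ended = true;
    } else if (!line.startsWith('#') && pendingDuration !== null) {
      // A non-tag line after #EXTINF is the segment URL.
      segments.push({ uri: line, duration: pendingDuration });
      pendingDuration = null;
    }
  }

  const totalDuration = segments.reduce((sum, s) => sum + s.duration, 0);
  return { segments, totalDuration, ended };
}

const sample = [
  '#EXTM3U',
  '#EXT-X-VERSION:3',
  '#EXT-X-TARGETDURATION:5',
  '#EXTINF:4.804800,',
  '720p_0.ts',
  '#EXTINF:3.203200,',
  '720p_1.ts',
  '#EXT-X-ENDLIST',
].join('\n');

const parsed = parseMediaPlaylist(sample);
console.log(parsed.segments.length, parsed.ended); // 2 true
```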

Dynamic Adaptive Streaming over HTTP (DASH) uses a similar layout, with the .m4s extension for the segments and an XML manifest file.

For adaptive bitrate streaming, the source video needs to be converted into segments at every bitrate we want to offer, one per resolution such as 360p, 480p, 720p and 1080p. For each bitrate, we create a separate playlist file that links to its segments. Finally, we link all the playlist files from a single index.m3u8 file, which is the file the browser reads. We will put all the playlist files and segments for a particular video under a single folder. This is only one of many ways to organize playlists and segments. However you organize them, the URL of every segment listed in a playlist must be reachable by the client.

In the case of a live stream, the segments should be dynamically created and the playlist file should be updated accordingly.

index.m3u8

    #EXTM3U
    #EXT-X-VERSION:3
    # BANDWIDTH is the peak bitrate of the playlist below in bits per second; RESOLUTION is its frame size
    #EXT-X-STREAM-INF:BANDWIDTH=800000,RESOLUTION=640x360
    # Playlist that references the segments for the bandwidth and resolution above.
    # It can also be absolute, like https://yourcdn.com/videofolder/360p.m3u8
    360p.m3u8
    #EXT-X-STREAM-INF:BANDWIDTH=1400000,RESOLUTION=842x480
    480p.m3u8
    #EXT-X-STREAM-INF:BANDWIDTH=2800000,RESOLUTION=1280x720
    720p.m3u8
    #EXT-X-STREAM-INF:BANDWIDTH=5000000,RESOLUTION=1920x1080
    1080p.m3u8
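Because the index playlist is just one #EXT-X-STREAM-INF line plus one URL per quality level, it can be generated from a list of renditions. The following is a small sketch of that idea; buildMasterPlaylist and the shape of the variant objects are assumptions made for illustration.

```javascript
// Build an index (master) playlist from a list of variants.
// Each variant: { bandwidth (bits per second), width, height, uri }.
function buildMasterPlaylist(variants) {
  const lines = ['#EXTM3U', '#EXT-X-VERSION:3'];
  for (const v of variants) {
    lines.push(
      `#EXT-X-STREAM-INF:BANDWIDTH=${v.bandwidth},RESOLUTION=${v.width}x${v.height}`,
      v.uri
    );
  }
  return lines.join('\n') + '\n';
}

const indexPlaylist = buildMasterPlaylist([
  { bandwidth: 800000, width: 640, height: 360, uri: '360p.m3u8' },
  { bandwidth: 1400000, width: 842, height: 480, uri: '480p.m3u8' },
]);
console.log(indexPlaylist);
```

Keeping the ladder in one data structure means the playlist and the encoder settings cannot drift apart.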

We can use FFmpeg to encode the video and generate the segments and playlist files. FFmpeg supports HLS output out of the box and will set the tags and create the playlist files for us. For a full list of supported tags, refer to Apple's documentation.

HLS on the browser

As of the time of writing, HLS is natively supported in only a few browsers. Mainstream browsers like Chrome and Firefox are yet to add native support for HLS playback. This doesn't mean that we can't play HLS in those browsers: HLS can be played with a JavaScript library in browsers that don't support it natively, as long as they support Media Source Extensions (MSE). Media Source Extensions is a standard JavaScript API that lets a script supply the input to media elements such as the HTML5 <video> tag through a MediaSource object. The MediaSource object holds one or more SourceBuffer objects, each of which receives chunks of media for a track of the stream. The client can therefore fetch segments progressively using the native fetch API and append them to a SourceBuffer, and the MediaSource object, attached to the video element, plays them back. The quality is changed by appending segments from a different bitrate. All this seems like a lot of work, and thankfully there are lots of battle-tested libraries that do it for us. Popular libraries include videojs, Google's shakaplayer and hls.js.
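The decision between native playback and an MSE-based library can be sketched as a small feature check. The function name choosePlaybackStrategy is hypothetical; canPlayType and MediaSource.isTypeSupported are standard browser APIs. The MP4 codec string is an assumption based on the fact that MSE-based players typically repackage .ts segments into fragmented MP4 before appending them. The stubs at the bottom only exist so that the sketch also runs outside a browser.

```javascript
// video: an HTMLVideoElement (or any object with a canPlayType method).
// MediaSourceCtor: window.MediaSource in a browser, undefined where MSE is missing.
function choosePlaybackStrategy(video, MediaSourceCtor) {
  // canPlayType returns '', 'maybe' or 'probably'.
  if (video.canPlayType('application/vnd.apple.mpegurl') !== '') {
    return 'native'; // the browser can play the .m3u8 URL directly
  }
  if (
    typeof MediaSourceCtor === 'function' &&
    MediaSourceCtor.isTypeSupported('video/mp4; codecs="avc1.42E01E,mp4a.40.2"')
  ) {
    return 'mse'; // use a library such as videojs or hls.js
  }
  return 'unsupported';
}

// In a browser: choosePlaybackStrategy(document.createElement('video'), window.MediaSource)

// Stubs standing in for browser objects:
const nativeVideo = { canPlayType: () => 'maybe' };
const plainVideo = { canPlayType: () => '' };
class FakeMediaSource {
  static isTypeSupported() {
    return true;
  }
}

console.log(choosePlaybackStrategy(nativeVideo, undefined)); // native
console.log(choosePlaybackStrategy(plainVideo, FakeMediaSource)); // mse
console.log(choosePlaybackStrategy(plainVideo, undefined)); // unsupported
```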

Video processing pipeline using AWS

Now that we have an idea of what HLS is, let's implement a video processing pipeline on AWS that takes in a source video, makes it suitable for HLS streaming and streams it to users with the videojs library mentioned above.

AWS services we will be using:

- S3 - to store source video and output video

- Lambda - to convert the video into different formats using FFmpeg

- Step Functions - to coordinate between lambda functions

- DynamoDB - to store video metadata

- CloudFront - to distribute video to users

We will also be using the Serverless Framework to deploy the infrastructure, FFmpeg to convert video into HLS, Node.js to write the lambda functions and videojs on the client side.

What we will be making

You can find the GitHub repository here.

AWS Architecture

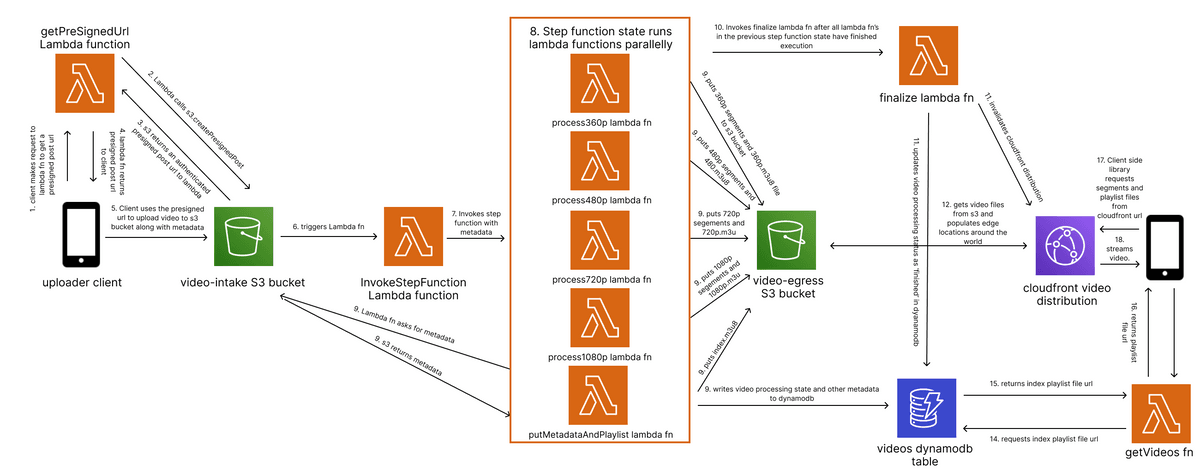

The logic of our app flows as follows:

1. Client makes request to getPreSignedUrl lambda function

We can use an S3 feature called pre-signed URLs to upload our source video file directly to S3. Pre-signed URLs let a client access a private object or upload objects to a private bucket without having AWS credentials of its own. The client calls the getPreSignedUrl lambda function to get an authenticated URL from S3. The key of the video object is set by the getPreSignedUrl lambda function using the crypto module in Node.js. This key is used to uniquely identify a video. Additional metadata sent from the client can be attached to the S3 object as key-value pairs. Do note that getPreSignedUrl needs the s3:PutObject permission to issue a pre-signed POST URL.

getPreSignedUrl.js lambda function

    // aws-sdk (v2) is bundled with the Node.js Lambda runtimes up to nodejs16.x, so no install is needed there;
    // newer runtimes bundle AWS SDK v3 instead, so package aws-sdk with the function on those
    const S3 = require('aws-sdk/clients/s3');
    const crypto = require('crypto');

    const s3 = new S3({
      region: 'ap-south-1',
    });

    // metaData can contain additional info sent from the client
    const createPresignedUrl = metaData => {
      const params = {
        Fields: {
          key: crypto.randomBytes(8).toString('hex'), // random 16-character hex string
          'x-amz-meta-title': metaData.title, // object metadata keys must have the form x-amz-meta-<yourmetadatakey>
        },
        Conditions: [
          ['starts-with', '$Content-Type', 'video/'], // accept only videos
          ['content-length-range', 0, 500000000], // max size in bytes (500 MB)
        ],
        Expires: 60, // url expires after 60 seconds
        Bucket: 'video-intake',
      };
      // s3.createPresignedPost has no .promise() method like other aws sdk methods, so we promisify it
      return new Promise((resolve, reject) => {
        s3.createPresignedPost(params, (err, data) => {
          if (err) {
            reject(err);
            return;
          }
          resolve(data);
        });
      });
    };

    const getPreSignedUrl = async event => {
      try {
        const data = await createPresignedUrl(JSON.parse(event.body));
        return {
          statusCode: 200,
          body: JSON.stringify({
            data,
          }),
        };
      } catch (err) {
        console.error(err); // keep the cause in the logs; the client only sees a generic message
        return {
          statusCode: 500,
          body: JSON.stringify({
            message: 'Internal server error',
          }),
        };
      }
    };

    module.exports = {
      handler: getPreSignedUrl,
    };

On the client side, we pack the video file together with the authentication fields returned by the getPreSignedUrl lambda function into a FormData object and make a POST request to S3.

app.js

    async function handleSubmit(e) {
      // runs when you click the upload button
      e.preventDefault();

      if (!file.files.length) {
        alert('please select a file');
        return;
      }

      if (!title.value) {
        alert('please enter a title');
        return;
      }

      try {
        // your url will be different from this; replace it with the api gateway endpoint of your lambda function
        const {
          data: { url, fields },
        } = await fetch('https://ve2odyhnhg.execute-api.ap-south-1.amazonaws.com/getpresignedurl', {
          method: 'POST',
          headers: {
            'Content-Type': 'application/json',
            Accept: 'application/json',
          },
          body: JSON.stringify({ title: title.value }), // send other metadata you want here
        }).then(res => res.json());

        const data = {
          bucket: 'video-intake',
          ...fields,
          'Content-Type': file.files[0].type,
          file: file.files[0], // S3 ignores form fields that come after the file, so keep it last
        };

        const formData = new FormData();

        for (const name in data) {
          formData.append(name, data[name]);
        }

        uploadStatus.textContent = 'uploading...';
        const res = await fetch(url, {
          method: 'POST',
          body: formData,
        });
        // fetch does not reject on HTTP error statuses, so check the response explicitly
        if (!res.ok) throw new Error(`upload failed with status ${res.status}`);
        uploadStatus.textContent = 'successfully uploaded';
      } catch (err) {
        console.error(err);
        uploadStatus.textContent = 'upload failed';
      }
    }
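One detail of the upload is easy to miss: in an S3 POST upload the file must be the last form field, because S3 ignores any fields that come after it. A minimal sketch of a helper that enforces this ordering is shown below; the name toPostEntries is hypothetical, and the strings in the example stand in for the real policy fields and File object.

```javascript
// Return [name, value] pairs in the order they should be appended to FormData:
// the fields from the pre-signed POST first, then Content-Type, then the file last.
function toPostEntries(fields, file, contentType) {
  const entries = Object.entries(fields).filter(
    ([name]) => name !== 'file' && name !== 'Content-Type'
  );
  entries.push(['Content-Type', contentType]);
  entries.push(['file', file]); // must stay last
  return entries;
}

const entries = toPostEntries(
  { key: 'a1b2c3d4e5f60718', Policy: 'base64-policy' }, // placeholder values
  'file-object-placeholder',
  'video/mp4'
);
console.log(entries.map(([name]) => name)); // [ 'key', 'Policy', 'Content-Type', 'file' ]

// In the browser:
// const formData = new FormData();
// for (const [name, value] of entries) formData.append(name, value);
```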

2. S3 upload triggers the step function

The video-intake S3 bucket is configured, through event notifications, to invoke the invokeStepFunction lambda function whenever a new object is uploaded to the bucket, and that function in turn starts the step function. We use an extra lambda function to start the step function because, as of writing, S3 event notifications can only target Lambda functions, SQS and SNS directly. You can also use EventBridge to trigger other AWS services from S3.

invokeStepFunction.js lambda function

    const AWS = require('aws-sdk');

    AWS.config.update({
      region: 'ap-south-1',
    });

    const stepFunctions = new AWS.StepFunctions();

    const invokeStepFunction = (event, context, callback) => {
      try {
        const id = event.Records[0].s3.object.key; // event object contains the payload from the s3 trigger
        const stateMachineParams = {
          stateMachineArn: process.env.STEP_FUNCTION_ARN,
          input: JSON.stringify({ id }), // input to each lambda function in the parallel state
        };
        // starts the step function execution
        stepFunctions.startExecution(stateMachineParams, (err, data) => {
          if (err) {
            console.error(err);
            const response = {
              statusCode: 500,
              body: JSON.stringify({
                message: 'There was an error',
              }),
            };
            callback(null, response);
          } else {
            console.log(data);
            const response = {
              statusCode: 200,
              body: JSON.stringify({
                message: 'Step function execution started',
              }),
            };
            callback(null, response);
          }
        });
      } catch (err) {
        console.error(err);
        callback(err); // report the failure instead of ending silently
      }
    };

    module.exports = {
      handler: invokeStepFunction,
    };
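The handler above reads the key of the first record only, which works here because the keys are random hex strings. In general, object keys in S3 event notifications are URL-encoded, with spaces sent as "+", and an event can carry several records. A hedged sketch of a more defensive extraction follows; extractObjectKeys is a hypothetical helper and the sample event is trimmed to the fields it uses.

```javascript
// Collect the decoded object keys from an S3 event notification payload.
function extractObjectKeys(event) {
  return (event.Records || [])
    .filter(record => record.eventSource === 'aws:s3')
    .map(record => decodeURIComponent(record.s3.object.key.replace(/\+/g, ' ')));
}

// Trimmed-down sample event for illustration.
const sampleEvent = {
  Records: [
    { eventSource: 'aws:s3', s3: { object: { key: 'a1b2c3d4e5f60718' } } },
    { eventSource: 'aws:s3', s3: { object: { key: 'my+video%281%29.mp4' } } },
  ],
};

console.log(extractObjectKeys(sampleEvent)); // [ 'a1b2c3d4e5f60718', 'my video(1).mp4' ]
```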

3. Step function execution

The actual video processing takes place inside the step function. We use a parallel state to run our lambda functions in parallel. The first 4 lambda functions take the source video file from S3 and convert it to their respective bitrates. Inside each lambda function, we use the node-fluent-ffmpeg package to convert the source video to a particular bitrate and make it suitable for HLS streaming by dividing it into segments. The output of the conversion is uploaded to the video-egress bucket.

process360p lambda function

    const S3 = require('aws-sdk/clients/s3');
    const ffmpeg = require('fluent-ffmpeg');
    const fs = require('fs');

    const s3 = new S3({
      region: 'ap-south-1',
    });

    // converts a timestamp like "00:01:23.45" to seconds
    const toSeconds = time => time.split(':').reduce((total, part) => total * 60 + parseFloat(part), 0);

    // returns a promise so that the step function waits for the transcode and sees any failure
    const process360p = event =>
      new Promise((resolve, reject) => {
        const id = event.id; // data from the invokeStepFunction lambda function
        const params = { Bucket: 'video-intake', Key: id };
        const readStream = s3.getObject(params).createReadStream(); // create s3 readStream
        let totalTime;

        fs.mkdirSync(`/tmp/${id}`, { recursive: true }); // recursive: no error if the folder already exists

        ffmpeg(readStream)
          .on('start', () => {
            console.log(`transcoding ${id} to 360p`);
          })
          .on('error', (err, stdout, stderr) => {
            console.log('stderr:', stderr);
            console.error(err);
            reject(err);
          })
          .on('end', async () => {
            try {
              const fileUploadPromises = fs.readdirSync(`/tmp/${id}`).map(file => {
                const uploadParams = { Bucket: 'video-egress', Key: `${id}/${file}`, Body: fs.readFileSync(`/tmp/${id}/${file}`) };
                console.log(`uploading ${file} to s3`);
                return s3.putObject(uploadParams).promise();
              });
              await Promise.all(fileUploadPromises); // upload output to s3
              fs.rmSync(`/tmp/${id}`, { recursive: true, force: true });
              console.log('tmp is deleted!');
              resolve({ id });
            } catch (err) {
              reject(err);
            }
          })
          .on('codecData', data => {
            totalTime = toSeconds(data.duration);
          })
          .on('progress', progress => {
            const percent = Math.ceil((toSeconds(progress.timemark) / totalTime) * 100);
            console.log(`progress :- ${percent}%`);
          })
          .outputOptions(['-vf scale=w=640:h=360', '-c:a aac', '-ar 48000', '-b:a 96k', '-c:v h264', '-profile:v main', '-crf 20', '-g 48', '-keyint_min 48', '-sc_threshold 0', '-b:v 800k', '-maxrate 856k', '-bufsize 1200k', '-f hls', '-hls_time 4', '-hls_playlist_type vod', `-hls_segment_filename /tmp/${id}/360p_%d.ts`])
          .output(`/tmp/${id}/360p.m3u8`) // output files are temporarily stored in the tmp directory
          .run();
      });

    module.exports = {
      handler: process360p,
    };

Here we use Node.js streams to stream the source video file from S3 into the fluent-ffmpeg library and temporarily store the output in Lambda's /tmp storage. Once the conversion finishes, the output is uploaded to another S3 bucket (video-egress).

We use Lambda layers to provide the lambda runtimes with the FFmpeg binaries and the fluent-ffmpeg library.

In a production environment, you may want to add error handling and retry mechanisms to the step function in case any of the lambda functions fails.

Also note that in step functions, the return value of a function becomes the input to the next state. That's how we pass the id of the video between lambda functions. The input can be accessed through the event parameter of a lambda function.
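To illustrate how the parallel state, the retries and the data passing fit together, here is a sketch that builds a state machine definition in Amazon States Language as a plain JavaScript object. It is not the definition from the repository: the state names, the retry numbers and the ARNs are all hypothetical, and only the structure (a Parallel state with five branches followed by a finalize task) follows the description above.

```javascript
// A Task state that invokes a lambda function and retries on failure.
// The retry settings are illustrative values, not recommendations.
function taskState(resourceArn, extra) {
  return {
    Type: 'Task',
    Resource: resourceArn,
    Retry: [
      {
        ErrorEquals: ['States.TaskFailed', 'States.Timeout'],
        IntervalSeconds: 5,
        MaxAttempts: 2,
        BackoffRate: 2,
      },
    ],
    ...extra,
  };
}

function buildDefinition(branchArns, finalizeArn) {
  return {
    StartAt: 'ProcessVideo',
    States: {
      ProcessVideo: {
        Type: 'Parallel',
        // Every branch receives the same input ({ id }) and runs independently.
        Branches: branchArns.map((arn, i) => ({
          StartAt: `Branch${i}`,
          States: { [`Branch${i}`]: taskState(arn, { End: true }) },
        })),
        // The output is an array with one entry per branch, in branch order.
        Next: 'Finalize',
      },
      Finalize: taskState(finalizeArn, { End: true }),
    },
  };
}

// Hypothetical ARNs for illustration only.
const prefix = 'arn:aws:lambda:ap-south-1:123456789012:function:';
const definition = buildDefinition(
  ['process360p', 'process480p', 'process720p', 'process1080p', 'putMetadataAndPlaylist'].map(
    name => prefix + name
  ),
  prefix + 'finalize'
);
console.log(JSON.stringify(definition, null, 2));
```

Because the Parallel state outputs an array in branch order, the finalize function can find the result of putMetadataAndPlaylist at index 4.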

FFmpeg options

We can pass configuration flags to FFmpeg using fluent-ffmpeg's outputOptions method. The recommended configurations are taken from here.

| Option | Description |
| --- | --- |
| -vf | scaling filter used to set the output resolution |
| -c:a aac | sets the audio codec to AAC |
| -ar 48000 | sets the audio sampling rate to 48 kHz |
| -b:a 96k | sets the audio bitrate to 96 kbit/s |
| -c:v h264 | sets the video codec to H.264 |
| -profile:v main | sets the H.264 profile to main, which modern devices support |
| -crf 20 | constant rate factor, controls overall quality |
| -g 48 -keyint_min 48 | create a keyframe every 48 frames |
| -sc_threshold 0 | don't create keyframes on scene change, only according to -g |
| -b:v 800k | sets the video bitrate to 800 kbit/s |
| -maxrate 856k | maximum video bitrate allowed |
| -f hls | sets the output format to HLS |
| -hls_time 4 | sets the target duration of an HLS segment, in seconds |
| -hls_playlist_type vod | sets the HLS playlist type to video on demand |
| -hls_segment_filename /tmp/${id}/360p_%d.ts | writes each segment to /tmp/id, where id is the key that uniquely identifies the video; '%d' is a counter that starts at 0 |

The output method of the fluent-ffmpeg library accepts the path where the playlist file should be saved.

The process480p, process720p and process1080p lambda functions are very similar to process360p. The only differences are the resolution and the audio and video bitrates passed to FFmpeg. For a full list of recommended bitrates for each resolution, check here.

Finally, the putMetadataAndPlaylist lambda function reads the object metadata from the S3 bucket and writes it to a DynamoDB table. The item written to the table also contains a status field, which the function sets to 'processing'. This function also writes the index.m3u8 file to the output bucket.
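Since the four processing functions differ only in a handful of numbers, the option list can be generated from a bitrate ladder. The sketch below reproduces the 360p options from this post exactly. For the other resolutions, the video bitrates are taken from the index.m3u8 bandwidth values, while the audio bitrates and the maxrate and bufsize multipliers (1.07 and 1.5, which happen to match the 360p numbers) are assumptions for illustration.

```javascript
// Bitrate ladder. Only the 360p row is taken verbatim from this post;
// audio bitrates for the other rows are assumed values.
const ladder = [
  { name: '360p', width: 640, height: 360, videoKbps: 800, audioKbps: 96 },
  { name: '480p', width: 842, height: 480, videoKbps: 1400, audioKbps: 128 },
  { name: '720p', width: 1280, height: 720, videoKbps: 2800, audioKbps: 128 },
  { name: '1080p', width: 1920, height: 1080, videoKbps: 5000, audioKbps: 192 },
];

// Build the list passed to fluent-ffmpeg's outputOptions for one rendition.
function buildHlsOptions(rendition, outDir) {
  const maxrate = Math.round(rendition.videoKbps * 1.07); // assumed headroom above the target bitrate
  const bufsize = Math.round(rendition.videoKbps * 1.5); // assumed rate-control buffer size
  return [
    `-vf scale=w=${rendition.width}:h=${rendition.height}`,
    '-c:a aac',
    '-ar 48000',
    `-b:a ${rendition.audioKbps}k`,
    '-c:v h264',
    '-profile:v main',
    '-crf 20',
    '-g 48',
    '-keyint_min 48',
    '-sc_threshold 0',
    `-b:v ${rendition.videoKbps}k`,
    `-maxrate ${maxrate}k`,
    `-bufsize ${bufsize}k`,
    '-f hls',
    '-hls_time 4',
    '-hls_playlist_type vod',
    `-hls_segment_filename ${outDir}/${rendition.name}_%d.ts`,
  ];
}

const options360p = buildHlsOptions(ladder[0], '/tmp/a1b2c3d4e5f60718');
console.log(options360p.join(' '));
```

With a helper like this, one parameterized lambda function could replace the four near-identical ones, at the cost of passing the rendition name in the step function input.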

putMetadataAndPlaylist lambda function

    const AWS = require('aws-sdk');

    AWS.config.update({
      region: 'ap-south-1',
    });

    const s3 = new AWS.S3();
    const dynamoDb = new AWS.DynamoDB.DocumentClient();

    const putMetadataAndPlaylist = async event => {
      const id = event.id;
      try {
        const s3Params = {
          Bucket: 'video-intake',
          Key: id,
        };

        const data = await s3.headObject(s3Params).promise(); // gets metadata from s3

        const dynamoParams = {
          TableName: 'videos',
          Item: {
            id,
            title: data.Metadata.title,
            url: `https://${process.env.CLOUDFRONT_DOMAIN}/${id}/index.m3u8`,
            status: 'processing',
          },
        };

        await dynamoDb.put(dynamoParams).promise(); // writes metadata to dynamoDb
        console.log('Successfully written metadata to dynamoDb');

        const content = [
          '#EXTM3U',
          '#EXT-X-VERSION:3',
          '#EXT-X-STREAM-INF:BANDWIDTH=800000,RESOLUTION=640x360',
          '360p.m3u8',
          '#EXT-X-STREAM-INF:BANDWIDTH=1400000,RESOLUTION=842x480',
          '480p.m3u8',
          '#EXT-X-STREAM-INF:BANDWIDTH=2800000,RESOLUTION=1280x720',
          '720p.m3u8',
          '#EXT-X-STREAM-INF:BANDWIDTH=5000000,RESOLUTION=1920x1080',
          '1080p.m3u8',
        ].join('\n');

        await s3.putObject({ Bucket: 'video-egress', Key: `${id}/index.m3u8`, Body: content }).promise(); // writes index.m3u8 to output bucket

        console.log('Successfully written index playlist to s3');

        return { id };
      } catch (err) {
        console.error(err);
        throw err; // let the step function see the failure; finalize relies on the returned id
      }
    };

    module.exports = {
      handler: putMetadataAndPlaylist,
    };

4. The finalize lambda function

The finalize lambda function is the last task of the step function and is only executed after the parallel state has finished. It does two things:

- Change the status to 'finished' in the video metadata inside the DynamoDB table

- Invalidate the CloudFront distribution

finalize lambda function

    const AWS = require('aws-sdk');

    AWS.config.update({
      region: 'ap-south-1',
    });

    const dynamoDb = new AWS.DynamoDB.DocumentClient();
    const cloudfront = new AWS.CloudFront();

    const finalize = async event => {
      try {
        // event is the output array of the parallel state; index 4 is the putMetadataAndPlaylist branch
        const id = event[4].id;

        const dynamoParams = {
          TableName: 'videos',
          Key: {
            id,
          },
          UpdateExpression: 'set #videoStatus = :x',
          ExpressionAttributeNames: { '#videoStatus': 'status' }, // because status is a reserved keyword in dynamoDb
          ExpressionAttributeValues: {
            ':x': 'finished',
          },
        };

        await dynamoDb.update(dynamoParams).promise(); // updates status of the video
        console.log('Successfully updated video status');

        const cloudfrontParams = {
          DistributionId: process.env.CLOUDFRONT_ID,
          InvalidationBatch: {
            CallerReference: Date.now().toString(),
            Paths: {
              Quantity: 1,
              Items: [`/${id}/*`],
            },
          },
        };

        await cloudfront.createInvalidation(cloudfrontParams).promise(); // invalidates cached paths of this video
        console.log('cloudfront invalidated');
      } catch (err) {
        console.error(err);
        throw err; // mark the execution as failed instead of reporting success
      }
    };

    module.exports = {
      handler: finalize,
    };

At this point, the video is ready to be streamed by the client using the videojs library.
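The finalize function reads the id from event[4], which silently depends on putMetadataAndPlaylist being the fifth branch of the parallel state. A small, hypothetical helper that looks for the id in any branch output makes the function independent of branch order; this is a sketch, not code from the repository.

```javascript
// parallelOutput: the array produced by the parallel state, one entry per branch.
// Branches that return nothing show up as null.
function findVideoId(parallelOutput) {
  const withId = parallelOutput.find(output => output && typeof output.id === 'string');
  if (!withId) throw new Error('no branch returned a video id');
  return withId.id;
}

console.log(findVideoId([null, null, null, null, { id: 'a1b2c3d4e5f60718' }])); // a1b2c3d4e5f60718
```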

index.html

    <!DOCTYPE html>
    <html lang="en">

    <head>
      <meta charset="UTF-8">
      <meta http-equiv="X-UA-Compatible" content="IE=edge">
      <meta name="viewport" content="width=device-width, initial-scale=1.0">
      <link href="https://vjs.zencdn.net/7.18.1/video-js.css" rel="stylesheet" />
      <link href="./css/style.css" rel="stylesheet" />
      <title>Serverless video processing using aws and hls</title>
    </head>

    <body>
      <div class="upload">
        <form method="post" style="margin-bottom: 100px;">
          <label>video title:<input type="text" name="title" /></label>
          <input type="file" name="file" accept="video/*" />
          <p id="upload-status"></p>
          <button type="submit">upload</button>
        </form>
      </div>
      <div id="videos-container">
        <!-- call getVideos lambda function and insert videos using js-->
      </div>
      <script src="https://vjs.zencdn.net/7.18.1/video.min.js"></script>
      <script src="js/videojs-contrib-quality-levels.min.js"></script>
      <script src="js/videojs-hls-quality-selector.min.js"></script>
      <script src="js/app.js"></script>
    </body>

    </html>

app.js

    // elements
    const form = document.querySelector('form');
    const file = document.querySelector('input[name="file"]');
    const title = document.querySelector('input[name="title"]');
    const videosContainer = document.getElementById('videos-container');
    const uploadStatus = document.getElementById('upload-status');

    // functions
    async function handleSubmit(e) {
      // runs when you click the upload button
      e.preventDefault();

      if (!file.files.length) {
        alert('please select a file');
        return;
      }

      if (!title.value) {
        alert('please enter a title');
        return;
      }

      try {
        // your url will be different from this; replace it with the api gateway endpoint of your lambda function
        const {
          data: { url, fields },
        } = await fetch('https://ve2odyhnhg.execute-api.ap-south-1.amazonaws.com/getpresignedurl', {
          method: 'POST',
          headers: {
            'Content-Type': 'application/json',
            Accept: 'application/json',
          },
          body: JSON.stringify({ title: title.value }), // send other metadata you want here
        }).then(res => res.json());

        const data = {
          bucket: 'video-intake',
          ...fields,
          'Content-Type': file.files[0].type,
          file: file.files[0], // S3 ignores form fields that come after the file, so keep it last
        };

        const formData = new FormData();

        for (const name in data) {
          formData.append(name, data[name]);
        }

        uploadStatus.textContent = 'uploading...';
        const res = await fetch(url, {
          method: 'POST',
          body: formData,
        });
        // fetch does not reject on HTTP error statuses, so check the response explicitly
        if (!res.ok) throw new Error(`upload failed with status ${res.status}`);
        uploadStatus.textContent = 'successfully uploaded';
      } catch (err) {
        console.error(err);
        uploadStatus.textContent = 'upload failed';
      }
    }

    async function displayAllVideos() {
      const videos = await fetch('https://ve2odyhnhg.execute-api.ap-south-1.amazonaws.com/getVideos').then(res => res.json());

      console.log(videos);

      videos.forEach(video => {
        let html;
        if (video.status === 'processing') {
          // still transcoding: show a spinner instead of a player
          html = `<div class="video">
            <h3 class="title">${video.title}</h3>
            <div class="spinner-container">
              <div id="loading"></div>
              <p>Processing...</p>
            </div>
          </div>`;
          videosContainer.insertAdjacentHTML('beforeend', html);
        } else {
          html = `<div class="video">
            <h3 class="title">${video.title}</h3>
            <video id="video-${video.id}" class="video-js" data-setup='{}' controls width="500" height="300">
              <source src="${video.url}" type="application/x-mpegURL">
            </video>
          </div>`;
          videosContainer.insertAdjacentHTML('beforeend', html);
          const player = videojs(`video-${video.id}`);
          // adds a quality menu to the player (provided by the videojs-hls-quality-selector plugin)
          player.hlsQualitySelector({
            displayCurrentQuality: true,
          });
        }
      });
    }

    // listeners
    form.addEventListener('submit', e => handleSubmit(e));

    // function calls
    displayAllVideos();

The type attribute of the <source> tag should be application/x-mpegURL for HLS streaming.

Also note that, as of writing, videojs does not provide a way to change the streaming quality out of the box, so we have to use the videojs-contrib-quality-levels and videojs-hls-quality-selector plugins.

The serverless.yaml configuration file for this architecture can be found here. Clone the repo and run sls deploy to test it out.

Limitations of this approach

Even though this approach has less operational overhead thanks to its serverless nature, it has certain limitations.

- As of writing, the maximum timeout of a lambda function is 15 minutes, so every video processing job has to finish within 15 minutes.

- As of writing, the maximum memory that can be allocated to a lambda function is 10 GB, and vCPU cores are allocated in proportion to the memory. For example, a lambda function with 10 GB of memory gets 6 vCPU cores. For small to medium workloads, 6 vCPUs are enough to finish the job within 15 minutes, but for long, high-resolution videos the lambda function may time out.
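A rough way to judge whether a video fits within the limit is to divide its duration by the transcoding speed you measure for a given resolution. The sketch below does that arithmetic; speedFactor (seconds of video transcoded per second of wall-clock time) and overheadSeconds (download, upload and start-up time) are hypothetical inputs that you would have to measure on your own workload.

```javascript
const LAMBDA_TIMEOUT_SECONDS = 15 * 60; // 15-minute Lambda limit

// Estimate the job duration and compare it with the Lambda timeout.
function fitsInLambdaTimeout(videoSeconds, speedFactor, overheadSeconds = 30) {
  const estimatedSeconds = videoSeconds / speedFactor + overheadSeconds;
  return { estimatedSeconds, fits: estimatedSeconds <= LAMBDA_TIMEOUT_SECONDS };
}

// A 20-minute video transcoded at 2x real time: 1200 / 2 + 30 = 630 seconds.
console.log(fitsInLambdaTimeout(20 * 60, 2)); // { estimatedSeconds: 630, fits: true }

// A 2-hour video transcoded at 4x real time: 7200 / 4 + 30 = 1830 seconds.
console.log(fitsInLambdaTimeout(2 * 60 * 60, 4)); // { estimatedSeconds: 1830, fits: false }
```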

I hope this post gave you some idea of how video processing and streaming on the web work. If you have any doubts or questions about the implementation details of the architecture, drop me a comment below.