Single thread vs child process vs worker threads vs cluster in Node.js

If you want to jump directly to the solutions, skip ahead to the solution section. If you want to know why these three modules exist and which problems they solve in Node.js, read the problem section below.

The problem

Input/output-bound operations, such as responding to an HTTP request, talking to a database or talking to other servers, are where a Node.js application shines. Because Node.js runs your JavaScript on a single thread and handles I/O asynchronously, it can serve many requests quickly with low system resource consumption. CPU-bound operations, on the other hand, such as calculating the Fibonacci of a number, checking whether a number is prime or heavy machine learning work, will make the application struggle: by default your JavaScript runs on that single thread, so it uses only one CPU core no matter how many cores you have.

If we run such a heavy CPU-bound operation in a web application, Node's single thread is blocked, so the web server can't respond to any other request while it is busy calculating our big Fibonacci number.

Allow me to demonstrate an example of this behavior using an express server.

All examples below are in the context of a web application, but the same logic applies to any kind of Node.js application.

server.js

```javascript
const express = require("express")
const app = express()

app.get("/getfibonacci", (req, res) => {
  const startTime = new Date()
  const result = fibonacci(parseInt(req.query.number)) //parseInt is for converting string to number
  const endTime = new Date()
  res.json({
    number: parseInt(req.query.number),
    fibonacci: result,
    time: endTime.getTime() - startTime.getTime() + "ms",
  })
})

const fibonacci = n => {
  if (n <= 1) {
    return 1
  }

  return fibonacci(n - 1) + fibonacci(n - 2)
}

app.listen(3000, () => console.log("listening on port 3000"))
```

We are using simple CPU-intensive tasks such as finding Fibonacci numbers here for the sake of simplicity. In a real-world scenario, it may be something like compressing a video or running machine learning operations.
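To see the blocking without running Express, here is a minimal sketch that uses only Node.js built-ins. It schedules a timer with a 0 ms delay and then runs the same recursive fibonacci synchronously; the timer can only fire after fibonacci returns. The helper name demoBlocking is hypothetical, and the delay you see depends on your machine.

```javascript
// Same recursive definition as in server.js (fibonacci(0) = fibonacci(1) = 1).
const fibonacci = n => (n <= 1 ? 1 : fibonacci(n - 1) + fibonacci(n - 2))

// Hypothetical helper: shows that a synchronous CPU-bound call delays
// every other callback that is waiting on the event loop.
function demoBlocking(n) {
  const scheduledAt = Date.now()
  // Asked to run "as soon as possible"...
  setTimeout(() => {
    console.log(`timer fired after ${Date.now() - scheduledAt} ms`)
  }, 0)
  // ...but the event loop is stuck here until fibonacci(n) returns.
  const result = fibonacci(n)
  console.log(`fibonacci(${n}) = ${result}, computed in ${Date.now() - scheduledAt} ms`)
  return result
}

demoBlocking(32)
```

The timer reports a delay close to the time fibonacci took, even though it asked for 0 ms. In server.js the same thing happens to every incoming HTTP request.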

Wait, can't promises solve this problem?

This may be a silly question, but for a brief moment while I was researching this article, I thought: "Aren't promises supposed to solve this problem? Aren't promises supposed to unblock things by doing them asynchronously?" Well, yes, but no.

Let's look at the same problem using promises (this time with an is-prime function, because using promises in a recursive function can get messy).

serverWithPromises.js

```javascript
const express = require("express")
const app = express()

app.get("/isprime", async (req, res) => {
  const startTime = new Date()
  const result = await isPrime(parseInt(req.query.number)) //parseInt is for converting string to number
  const endTime = new Date()
  res.json({
    number: parseInt(req.query.number),
    isprime: result,
    time: endTime.getTime() - startTime.getTime() + "ms",
  })
})

app.get("/testrequest", (req, res) => {
  res.send("I am unblocked now")
})

const isPrime = number => {
  return new Promise(resolve => {
    // The executor runs synchronously, so this loop still blocks the event loop.
    let isPrime = number > 1 // 0, 1 and negative numbers are not prime
    for (let i = 2; i < number; i++) {
      if (number % i === 0) {
        isPrime = false
        break
      }
    }

    resolve(isPrime)
  })
}

app.listen(3000, () => console.log("listening on port 3000"))
```

If you request /isprime with a large prime number and call /testrequest while it is running, the second request still waits until the first one finishes. The reason is that even though a promise settles asynchronously, the promise executor function (our is-prime loop) runs synchronously, the moment the promise is created, so it blocks our app just like before. Promises are praised in the JavaScript community as a way to do "asynchronous non-blocking operations" because they are good at jobs that take more time, not jobs that take more CPU power. By "jobs that take more time" I mean jobs like talking to a database or to another server, which is the bulk of what web servers do. These jobs don't finish immediately, and most of their time is spent waiting for a response. Node handles them by handing the waiting off, carrying on with other work, and running a function (often called a "callback function") when an event occurs, such as the database returning data. But hey, won't another thread be required to listen for that event? Partly. For network I/O, libuv (the library underneath Node) relies on operating system facilities such as epoll, kqueue or IOCP, while file system operations, DNS lookups and some crypto functions run on a small libuv thread pool. How Node manages these queues, events and threads internally deserves an article of its own.
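A quick way to convince yourself that the executor runs synchronously is to record the order in which things happen. In this sketch (plain Node.js, no libraries), the executor's entry is recorded before the line that follows new Promise, while the then() callback only runs after all the synchronous code has finished.

```javascript
const order = []

const promise = new Promise(resolve => {
  order.push("executor") // runs immediately, on the current thread
  resolve()
})
order.push("after new Promise")

promise.then(() => {
  order.push("then callback") // queued as a microtask, runs later
  console.log(order.join(" -> ")) // executor -> after new Promise -> end of script -> then callback
})

order.push("end of script")
```

If the executor contained a long loop, everything after new Promise, including other requests, would wait for it.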

Let us see the asynchronous non-blocking operations in action.

asyncServer.js

```javascript
const express = require("express")
const app = express()
//node-fetch is a library used to make HTTP requests in Node.js.
//node-fetch v3 is ESM-only, so require() needs v2; Node 18+ also ships a global fetch().
const fetch = require("node-fetch")

app.get("/calltoslowserver", async (req, res) => {
  const result = await fetch("http://localhost:5000/slowrequest") //fetch returns a promise
  const resJson = await result.json()
  res.json(resJson)
})

app.get("/testrequest", (req, res) => {
  res.send("I am unblocked now")
})

app.listen(4000, () => console.log("listening on port 4000"))
```

slowServer.js

```javascript
const express = require("express")
const app = express()

app.get("/slowrequest", (req, res) => {
  setTimeout(() => res.json({ message: "sry i was late" }), 10000) //setTimeout is used to mock a network delay of 10 seconds
})

app.listen(5000, () => console.log("listening on port 5000"))
```

Even though the call to the slow server takes 10 seconds, /testrequest still answers immediately. This is because fetch from node-fetch returns a promise and the waiting happens outside our JavaScript code, so the event loop is free to handle other requests in the meantime. This single-threaded, non-blocking, asynchronous way of doing things is the default in Node.js.
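The same effect can be reproduced in a single file without a second server. In this sketch, slowRequest is a hypothetical stand-in for slowServer.js (a timer instead of a real network call, and 500 ms instead of 10 seconds); while it is pending, a second "request" is answered immediately because nothing occupies the thread.

```javascript
// Hypothetical stand-in for slowServer.js: resolves after `ms` milliseconds.
// Waiting on a timer does not occupy the JavaScript thread.
const slowRequest = ms =>
  new Promise(resolve => setTimeout(() => resolve({ message: "sry i was late" }), ms))

async function handleTwoRequests() {
  const start = Date.now()
  const log = []

  // "Request" 1: start the slow call, but don't block on it.
  const slow = slowRequest(500).then(body => {
    log.push(`calltoslowserver answered after ${Date.now() - start} ms: ${body.message}`)
  })

  // "Request" 2 arrives meanwhile and is answered right away.
  log.push(`testrequest answered after ${Date.now() - start} ms`)

  await slow
  return log
}

handleTwoRequests().then(log => log.forEach(line => console.log(line)))
```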

The solution

Node.js provides three solutions to this problem:

- child processes

- cluster

- worker threads

child processes

The child_process module provides the ability to spawn new processes, each with its own memory. The parent and child communicate through channels provided by the operating system: pipes connected to the child's stdin, stdout and stderr and, for Node.js children created with fork(), an IPC (inter-process communication) channel.

There are three methods in this module that we care about:

- child_process.spawn()

- child_process.fork()

- child_process.exec()
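exec() does not come up again in this article, so here is a brief sketch for completeness. Unlike spawn(), exec() runs the command inside a shell and buffers the whole output (up to its maxBuffer option) before handing it to a callback; util.promisify turns it into a promise that resolves with { stdout, stderr }. The helper name nodeVersion is hypothetical.

```javascript
const { exec } = require("child_process")
const util = require("util")

// exec() has a custom promisified form that resolves with { stdout, stderr }.
const execAsync = util.promisify(exec)

// Hypothetical helper: asks the current node binary for its version.
async function nodeVersion() {
  // The whole string goes through a shell (/bin/sh on Unix, cmd.exe on Windows),
  // so never build it from untrusted input such as req.query values.
  const { stdout } = await execAsync(`"${process.execPath}" --version`)
  return stdout.trim()
}

nodeVersion().then(version => console.log(version)) // prints the same string as process.version
```

Because of the shell and the buffering, exec() suits short commands with small output; spawn() streams the output instead, which is better for long-running processes.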

child_process.spawn()

This method is used to spawn a child process asynchronously. This child process can be any command that can be run from a terminal.

spawn takes the following syntax: spawn("command to run", [array of arguments], optionsObject)

The optionsObject has a variety of settings, which are listed in the official Node.js documentation.

The code below spawns an ls (list directory) process with the arguments -lash and the directory name taken from the query string, and sends its output back.

childspawnServer.js

```javascript
const express = require("express")
const app = express()
const { spawn } = require("child_process") //equal to const spawn = require('child_process').spawn

app.get("/ls", (req, res) => {
  //spawn() passes arguments directly (no shell); "." is used when ?directory= is missing
  const ls = spawn("ls", ["-lash", req.query.directory || "."])
  ls.stdout.on("data", data => {
    //Pipes (connections) for stdin, stdout and stderr are established between the parent
    //Node.js process and the spawned subprocess, and we can listen to the data event on stdout

    res.write(data.toString()) //data arrives as a stream (chunks of data)
    //since res is a writable stream, we are writing to it
  })
  ls.stderr.on("data", data => res.write(data.toString())) //e.g. "No such file or directory"
  ls.on("error", err => console.error(`failed to start ls: ${err.message}`))
  ls.on("close", code => {
    console.log(`child process exited with code ${code}`)
    res.end() //end the response once the subprocess has exited
  })
})

app.listen(7000, () => console.log("listening on port 7000"))
```
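A reusable version of the same pattern, without Express, can wrap spawn() in a promise. This is a sketch: runCommand is a hypothetical helper, and the example runs the current Node.js binary (process.execPath) instead of ls so that it also works on Windows.

```javascript
const { spawn } = require("child_process")

// Hypothetical helper: runs a command and resolves with everything it wrote to stdout.
function runCommand(command, args = []) {
  return new Promise((resolve, reject) => {
    const child = spawn(command, args) // no shell: args are passed as-is
    let output = ""
    let errors = ""
    child.stdout.setEncoding("utf8") // emit strings, safe for multi-byte characters
    child.stderr.setEncoding("utf8")
    child.stdout.on("data", chunk => (output += chunk))
    child.stderr.on("data", chunk => (errors += chunk))
    child.on("error", reject) // e.g. the command was not found
    child.on("close", code => {
      if (code === 0) resolve(output)
      else reject(new Error(`${command} exited with code ${code}: ${errors}`))
    })
  })
}

runCommand(process.execPath, ["-e", "console.log('hello from a child process')"])
  .then(output => console.log(output.trim())) // prints: hello from a child process
  .catch(err => console.error(err.message))
```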

Nothing stops us from spawning a Node.js process and doing another task there, but fork() is a better way to do so.

child_process.fork()

child_process.fork() is a special case of spawn() used specifically to spawn new Node.js processes. Like spawn(), it returns a ChildProcess object, but that object also has a built-in IPC communication channel that allows messages to be passed back and forth between the parent and the child.

fork takes the following syntax: fork("path to module", [array of arguments], optionsObject)

The optionsObject has a variety of settings, which are listed in the official Node.js documentation.
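To see where that IPC channel comes from, here is a sketch that builds it by hand: it spawns the current Node.js binary with an extra "ipc" entry in the stdio option, which gives the child process.send() and a "message" event, the same kind of channel fork() sets up for you. The child code is passed with -e so the example fits in one file; isPrimeInChild is a hypothetical name, and in real code fork() with a separate file is simpler.

```javascript
const { spawn } = require("child_process")

// Code for the child process. It answers one message, then disconnects so it can exit.
const childCode = `
process.on("message", ({ number }) => {
  let isPrime = number > 1
  for (let i = 2; i * i <= number; i++) {
    if (number % i === 0) { isPrime = false; break }
  }
  process.send({ number, isPrime }, () => process.disconnect())
})
`

// Hypothetical helper: checks a number in a separate Node.js process.
function isPrimeInChild(number) {
  return new Promise((resolve, reject) => {
    // The fourth stdio entry, "ipc", enables child.send() here and process.send() in the child.
    const child = spawn(process.execPath, ["-e", childCode], {
      stdio: ["inherit", "inherit", "inherit", "ipc"],
    })
    child.once("message", resolve)
    child.once("error", reject)
    child.once("exit", code => {
      if (code !== 0) reject(new Error(`child exited with code ${code}`))
    })
    child.send({ number })
  })
}

isPrimeInChild(97).then(result => console.log(result)) // { number: 97, isPrime: true }
```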

Using fork(), we can solve the problem discussed above by forking a separate Node.js process, executing the function in that process and returning the answer to the parent process when it is done. That way, the parent process isn't blocked and can keep responding to requests.

childforkServer.js

```javascript
const express = require("express")
const app = express()
const { fork } = require("child_process")

app.get("/isprime", (req, res) => {
  const childProcess = fork("./forkedchild.js") //the first argument to fork() is the name of the js file to be run by the child process
  const startTime = new Date()
  childProcess.send({ number: parseInt(req.query.number) }) //send method is used to send a message to the child process through IPC
  childProcess.on("message", message => {
    //on("message") method is used to listen for messages sent by the child process
    const endTime = new Date()
    res.json({
      ...message,
      time: endTime.getTime() - startTime.getTime() + "ms",
    })
  })
})

app.get("/testrequest", (req, res) => {
  res.send("I am unblocked now")
})

app.listen(3636, () => console.log("listening on port 3636"))
```

forkedchild.js

```javascript
process.on("message", message => {
  //child process is listening for messages from the parent process
  const result = isPrime(message.number)
  //exit only after the message has been handed to the IPC channel,
  //so the result is not lost and no orphaned process is left behind
  process.send(result, () => process.exit())
})

function isPrime(number) {
  let isPrime = number > 1 // 0, 1 and negative numbers are not prime

  for (let i = 2; i < number; i++) {
    if (number % i === 0) {
      isPrime = false
      break
    }
  }

  return {
    number: number,
    isPrime: isPrime,
  }
}
```

Caveats

- Separate memory is allocated for each child process, which means there is a time and resource overhead. Forking a new process on every request, as the example above does, pays that cost every time.
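To get a feel for that overhead on your own machine, this sketch times how long it takes to start a trivial Node.js process and wait for it to exit. timeNodeStartup is a hypothetical helper, and the number varies a lot between machines, so treat it only as a rough indication of what forking per request costs.

```javascript
const { spawn } = require("child_process")

// Hypothetical helper: milliseconds from spawning a trivial node process until it exits.
function timeNodeStartup() {
  return new Promise((resolve, reject) => {
    const start = process.hrtime.bigint()
    const child = spawn(process.execPath, ["-e", "0"]) // a child with almost nothing to do
    child.on("error", reject)
    child.on("exit", () => {
      const elapsedNs = process.hrtime.bigint() - start
      resolve(Number(elapsedNs) / 1e6)
    })
  })
}

timeNodeStartup().then(ms => console.log(`trivial child process took ${ms.toFixed(1)} ms`))
```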

Worker threads

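Essentially, the difference between worker threads and child processes is the same as the difference between a thread and a process. Worker threads run inside the same Node.js process, each with its own V8 isolate and event loop; they communicate by passing messages and can share memory through a SharedArrayBuffer. Ideally, the number of threads doing CPU-bound work should equal the number of CPU cores.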
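The "thread, not process" difference can be checked directly. In this sketch, a worker (its code passed as a string with the eval option so it fits in one file) reports its process.pid and increments a value in a SharedArrayBuffer; the main thread then sees the same pid and the worker's write. compareWithWorker is a hypothetical name.

```javascript
const { Worker } = require("worker_threads")

// Worker code passed as a string (eval: true) so the sketch fits in one file.
const workerCode = `
const { parentPort, workerData } = require("worker_threads")
const shared = new Int32Array(workerData.buffer)
Atomics.add(shared, 0, 1) // write to memory the main thread can also see
parentPort.postMessage({ pid: process.pid })
`

// Hypothetical helper: starts one worker and reports what it shares with the main thread.
function compareWithWorker() {
  const buffer = new SharedArrayBuffer(4) // room for one Int32, shared rather than copied
  return new Promise((resolve, reject) => {
    const worker = new Worker(workerCode, { eval: true, workerData: { buffer } })
    worker.once("message", ({ pid }) => {
      resolve({
        samePid: pid === process.pid, // a thread lives inside the same process
        sharedValue: Atomics.load(new Int32Array(buffer), 0), // the worker's write is visible here
      })
    })
    worker.once("error", reject)
  })
}

compareWithWorker().then(result => console.log(result)) // { samePid: true, sharedValue: 1 }
```

A forked child process, by contrast, would report a different pid and could only exchange copies of data over IPC.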

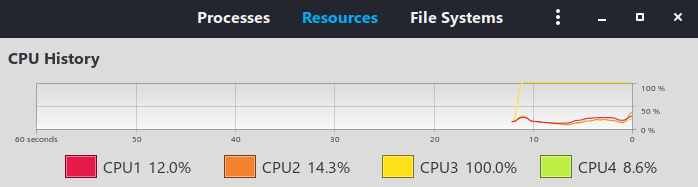

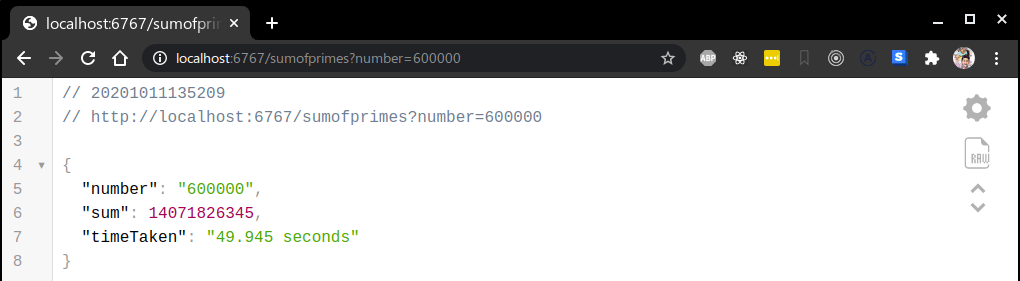

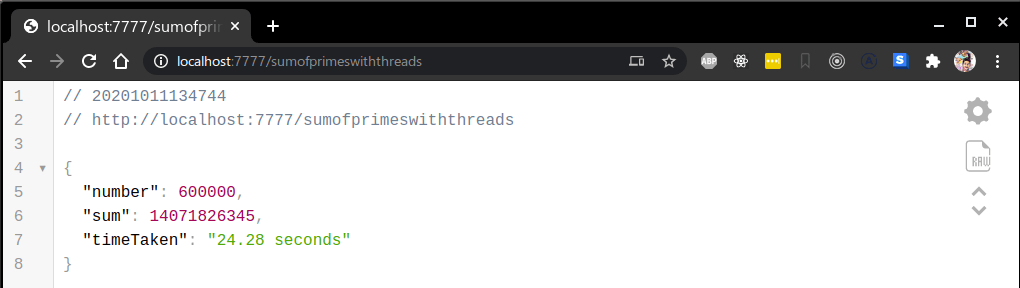

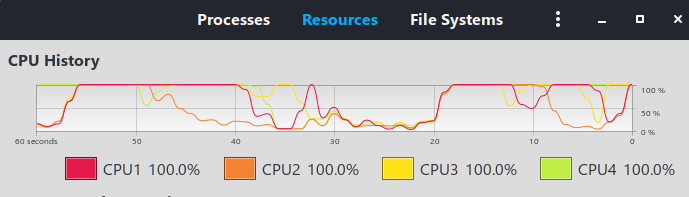

Let's compare the performance of the default single thread with multiple worker threads.

singleThreadServer.js

```javascript
const express = require("express")
const app = express()

function sumOfPrimes(n) {
  var sum = 0
  for (var i = 2; i <= n; i++) {
    for (var j = 2; j <= i / 2; j++) {
      if (i % j == 0) {
        break
      }
    }
    if (j > i / 2) {
      sum += i
    }
  }
  return sum
}

app.get("/sumofprimes", (req, res) => {
  const number = parseInt(req.query.number) //query values are strings
  const startTime = new Date().getTime()
  const sum = sumOfPrimes(number)
  const endTime = new Date().getTime()
  res.json({
    number: number,
    sum: sum,
    timeTaken: (endTime - startTime) / 1000 + " seconds",
  })
})

app.listen(6767, () => console.log("listening on port 6767"))
```

sumOfPrimesWorker.js

```javascript
const { workerData, parentPort } = require("worker_threads")
//workerData is the workerData option passed to the Worker constructor in multiThreadServer.js

const start = workerData.start
const end = workerData.end

var sum = 0
for (var i = start; i <= end; i++) {
  for (var j = 2; j <= i / 2; j++) {
    if (i % j == 0) {
      break
    }
  }
  if (j > i / 2) {
    sum += i
  }
}

parentPort.postMessage({
  //send a message with the result back to the parent thread
  start: start,
  end: end,
  result: sum,
})
```

multiThreadServer.js

```javascript
const express = require("express")
const app = express()
const { Worker } = require("worker_threads")

function runWorker(workerData) {
  return new Promise((resolve, reject) => {
    //first argument is the filename of the worker
    const worker = new Worker("./sumOfPrimesWorker.js", {
      workerData,
    })
    worker.on("message", resolve) //the promise resolves when the worker thread sends its result back
    worker.on("error", reject)
    worker.on("exit", code => {
      if (code !== 0) {
        reject(new Error(`Worker stopped with exit code ${code}`))
      }
    })
  })
}

function divideWorkAndGetSum() {
  // we are hardcoding the value 600000 for simplicity and dividing it
  //into 4 equal parts

  const start1 = 2
  const end1 = 150000
  const start2 = 150001
  const end2 = 300000
  const start3 = 300001
  const end3 = 450000
  const start4 = 450001
  const end4 = 600000
  //allocating a separate part to each worker
  const worker1 = runWorker({ start: start1, end: end1 })
  const worker2 = runWorker({ start: start2, end: end2 })
  const worker3 = runWorker({ start: start3, end: end3 })
  const worker4 = runWorker({ start: start4, end: end4 })
  //Promise.all resolves only when all the promises inside the array have resolved
  return Promise.all([worker1, worker2, worker3, worker4])
}

app.get("/sumofprimeswiththreads", async (req, res) => {
  const startTime = new Date().getTime()
  const values = await divideWorkAndGetSum() //values is an array containing all the resolved values
  //reduce is used to sum all the results from the workers
  const sum = values.reduce((accumulator, part) => accumulator + part.result, 0)

  const endTime = new Date().getTime()
  res.json({
    number: 600000,
    sum: sum,
    timeTaken: (endTime - startTime) / 1000 + " seconds",
  })
})

app.listen(7777, () => console.log("listening on port 7777"))
```

On a machine with four or more cores, /sumofprimeswiththreads responds faster than /sumofprimes?number=600000. This is because we divide the work into 4 parts, give each part to its own worker and execute them in parallel (at the same time).
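Instead of hardcoding four ranges, the split can follow the number of CPU cores. The hypothetical splitRange helper below divides an inclusive range into contiguous chunks whose sizes differ by at most one; each { start, end } chunk could then be passed to runWorker from multiThreadServer.js. This is a sketch, not part of the original server.

```javascript
const os = require("os")

// Hypothetical helper: split the inclusive range [start, end] into `parts`
// contiguous chunks whose sizes differ by at most one.
function splitRange(start, end, parts) {
  const total = end - start + 1
  const base = Math.floor(total / parts)
  const extra = total % parts // the first `extra` chunks get one extra number
  const chunks = []
  let chunkStart = start
  for (let i = 0; i < parts; i++) {
    const size = base + (i < extra ? 1 : 0)
    if (size === 0) break // more parts than numbers: stop early
    chunks.push({ start: chunkStart, end: chunkStart + size - 1 })
    chunkStart += size
  }
  return chunks
}

// One chunk per CPU core instead of a hardcoded 4 (and always at least one chunk).
const chunks = splitRange(2, 600000, Math.max(1, os.cpus().length))
console.log(`${chunks.length} chunks`, chunks)
```

Equal-size ranges are not equal work here: checking larger numbers takes longer, so the last chunk tends to finish last, and the response waits for the slowest worker.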

Cluster

Cluster is mainly used to scale a Node.js web server vertically, that is, to make full use of a more powerful machine with many CPU cores. It is built on top of the child_process module. In an HTTP server, the cluster module uses child_process.fork() to fork worker processes and sets up a primary/worker architecture (the code below uses the older "master" naming), where the primary process accepts incoming connections and distributes them to the worker processes in a round-robin fashion (the default on every platform except Windows). Ideally, the number of processes forked should equal the number of CPU cores your machine has.
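Round-robin simply means handing out work in turn: the first connection goes to worker 0, the next to worker 1, and so on, wrapping around after the last worker. The toy function below (assignRoundRobin is a hypothetical name) only models that idea with array indexes; it is not the cluster module's implementation. The real policy can be chosen with cluster.schedulingPolicy (cluster.SCHED_RR or cluster.SCHED_NONE) before the first worker is forked.

```javascript
// Toy model of round-robin scheduling: item i goes to worker i % workerCount.
// This is an illustration only, not the cluster module's actual code.
function assignRoundRobin(connections, workerCount) {
  const perWorker = Array.from({ length: workerCount }, () => [])
  connections.forEach((connection, i) => {
    perWorker[i % workerCount].push(connection)
  })
  return perWorker
}

const connections = ["c1", "c2", "c3", "c4", "c5", "c6"]
assignRoundRobin(connections, 4).forEach((list, worker) => {
  console.log(`worker ${worker}: ${list.join(", ")}`)
})
// worker 0: c1, c5
// worker 1: c2, c6
// worker 2: c3
// worker 3: c4
```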

Let's build an Express server using the cluster module.

```javascript
const cluster = require("cluster")
const cpuCount = require("os").cpus().length //returns the number of CPU cores

//cluster.isMaster is deprecated since Node.js 16 in favor of cluster.isPrimary (same value)
if (cluster.isMaster) {
  masterProcess()
} else {
  childProcess()
}

function masterProcess() {
  console.log(`Master process ${process.pid} is running`)

  //fork workers.

  for (let i = 0; i < cpuCount; i++) {
    console.log(`Forking process number ${i}...`)
    cluster.fork() //creates new Node.js processes
  }
  cluster.on("exit", (worker, code, signal) => {
    console.log(`worker ${worker.process.pid} died`)
    cluster.fork() //forks a new process if any process dies
  })
}

function childProcess() {
  const express = require("express")
  const app = express()
  //workers can share the same TCP port; the master hands connections to them

  app.get("/", (req, res) => {
    res.send(`hello from server ${process.pid}`)
  })

  app.listen(5555, () =>
    console.log(`server ${process.pid} listening on port 5555`)
  )
}
```

When we run the code above, cluster.isMaster (cluster.isPrimary in Node.js 16 and later) is true the first time, so masterProcess() is executed. This function forks one Node.js process per CPU core (4 on my machine). Each forked process runs the same file again, but this time cluster.isMaster is false because the process is a forked worker, so control goes to the else branch. As a result, childProcess() runs 4 times and 4 instances of the Express server are created, all listening on port 5555. Incoming connections are distributed among the four servers in a round-robin fashion, which lets the application use all of the CPU's cores. The Node.js documentation also notes that the round-robin approach has some built-in smarts to avoid overloading a worker process.

The cluster module is the easiest and fastest way to vertically scale a simple nodejs server. For more advanced and elastic scaling, tools like docker containers and Kubernetes are used.

Conclusion

Even though Node.js provides good support for multithreading, that doesn't mean we should always make our web applications multithreaded. Node.js is designed so that its default single-threaded behavior suits web servers, which tend to be I/O-bound; handling asynchronous I/O with minimal system resources is what Node.js is famous for. For simple I/O tasks, an extra thread or process only adds overhead and complexity. But when a web server does have to perform CPU-bound operations, it is easy to spin up a worker thread or a child process and delegate the task to it. In the end, the architecture should follow the application's needs and requirements.

Thanks for reading, and feel free to ask any questions in the comment section below.